Optimizing For Approval And Disapproval

How to proceed in a (semi-)adversarial social environment.

Suppose you are a member of a relatively large intellectual community that has frequent public discussions, a high level of interaction between members, and some mechanism for recording engagement with, and feedback on, the content its members submit.

Usually, new members of such a community spend most of their early hours “lurking” rather than contributing material. This is normal: almost anyone has to spend time gathering data when being introduced to a new community. But suppose the time comes when you’d like to engage with the community for the first time. How do you know when to do so? What do you write or talk about first? What do you do after your first round of feedback?

Many communities ask their new members to lurk, too. Usually, you’re told something like “when in doubt, lurk more.” In other words, err on the side of not posting if you’re not yet sure whether you should. Chances are, you’re still not completely up to speed on the material.

Okay, so suppose you’ve been lurking for roughly eight years, but you still don’t know whether you should post yet. At this point, I would say that the data is probably telling you something else. You may also have noticed that it’s not easy to tell how long anyone else has lurked, either. In fact, most of the top community members were kind of there the entire time, from the beginning. They just kind of showed up, and their first posts received wide acclaim.

The first time you feel the urge to post something substantial is usually the moment you have constructed (inside your mind, at least) enough arguments to build something larger than a comment. Moreover, you feel the urge to post this particular thing because, by your lights, the community has a blind spot about it, which strikes you as kind of odd. You figure your arguments are at least pretty well-articulated. Therefore, if you’re wrong, you’ll receive the missing piece of the puzzle right away. If you’re right, everyone will thank you and you’ll gain lots of new friends and/or poly relationships.

But the most likely outcome (at least in my experience) is that you will not find out whether you’re wrong, perhaps ever. You will be very lucky to receive attention at all, positive or negative. Even so, you might get some feedback along the lines of, “Well, this is all definitely completely wrong, but I don’t have the time or don’t know how to explain why. Try lurking more.”

My first piece of advice is that whenever you are trying out a new idea, building it out of as many concrete conceptual components as possible is extremely useful, which means that writing about it at all is a good idea. The more well-built, legible, and structured it is, the more localized and information-rich any potential feedback will be. Approvals will strengthen the good parts; disapprovals will weaken only the bad parts, which can then be morphed into good parts. Therefore, you should be trying to optimize for both approval and disapproval, and then you never need to be afraid of the latter.[1]

How to Determine if Disapproval is "Valid" or "Invalid"

If you can learn how to categorize and deal with all the types of disapproval you might encounter, you’re going to be a lot better off mentally. You can subdivide it, very simply, into two types:

Valid disapproval (That you agree with already).

Invalid disapproval (That you don’t agree with, or that seems painful or unpleasant to hear).

We’re defining “valid” here to mean that you consider it valid. Remember that you can’t reliably incorporate disapproval into changes you make to yourself or your knowledge and skills unless you actually agree with and consent to it. Change hurts, obviously, and is at least difficult to perform. But it shouldn’t hurt in the long term if it is a truly beneficial change to make.[2]

So if you ever receive feedback along the lines of, “Well, this is all definitely completely wrong, but I don’t have the time or don’t know how to explain why. Try lurking more,” you probably can’t incorporate it meaningfully, apart from the advice to lurk more, which you would continue to follow anyway.

You might be tempted to wonder, in that situation, whether to consider yourself smarter than the person delivering the critique. This is the critical moment (and it will recur quite often), because it presents you with a choice every time.

The trouble with critiques of that particular form (“definitely completely wrong”) is that they present the receiver with an awkward pair of choices: either they consider it true, and themselves very stupid or incompetent, or they consider it false, and the giver completely rude. That’s why I don’t recommend making those kinds of critiques.

I think you can probably reject those, because they would only be made under what I have to conclude are dubious a priori assumptions about the size of the inferential distance between the giver’s and the receiver’s models.[3] I like to imagine myself as a “much smarter person coming across work I consider wrong,” and then see what it sounds like to give such a critique. Would I sound like that? The answer is that I can almost always come up with something polite and meaningful, and even one remark about one small, independent piece of the work is still helpful and much more likely to be taken on board. I haven’t failed if I can’t disprove the entire thing.

Fun to Receive Invalid Disapproval

I have a confession to make, though: I really do find it kind of fun to receive lots of invalid disapproval. And that’s where I want to delve into something I said earlier: optimizing for disapproval, in addition to approval.

I also think it’s kind to let rude people be rude. After a while, you’ll start to be able to invert the disapproval coming toward you: it will actually correlate negatively with the truth, if you can successfully find the hidden blind spots in your community that cause this to happen. So what you’re really optimizing for is approval plus negative disapproval, both of which count in your favor.

How do you know when you’ve reached this point? That’s a much trickier question, but I’ll do my best to answer it. Not all disapproval matches the extreme example I gave above (although more of it does than you would expect). That leaves the question of how to deal with disapproval that is much more circumscribed and localized. Once again, I emphasize that you have to go with whatever your own evaluation says, for a fairly straightforward reason: you are the only one who can update your model.

But I have a reason for saying this. Even though I am basically saying that you have to go with your own evaluation at the end of the day, we can still choose how much to weight others’ evaluations of us within our own. Our own judgements can take others’ judgements of us into account. The main problem is that there is going to be external pressure to weight other people’s judgements of us more highly than our own, and that doing so will cause us pain (and will also, I claim, make us more wrong).

That external pressure will, by default, be strong enough to push you toward updating against yourself unless you apply internal, self-supportive pressure. You might want to balance these competing pressures somewhat. If you apply no inward supportive pressure at all, you end up in something like an “inadequate equilibria” situation. If you apply too much, you will probably risk reputational damage (and even if much of that damage consists of technically “invalid” disapproval, you may still want to be cautious here).

I see no reason why you oughtn’t to take others’ reputational status into account as well. Feedback from someone you consider wise should carry more weight in the outcome than feedback from someone you consider unwise. But keep in mind that most people who contribute to your feedback are probably unwise relative to you, especially on a topic you choose to contribute to, which makes you a relative expert compared to the majority of laypeople. This is another damper on how much you should incorporate or react to negative feedback.

Disapproval Categories

Let’s talk a bit about the types of feedback one can receive on all or part of one’s work, and how one should react to them:

Your arguments lack rigor.

You have defined wholly new terminology.

It's just difficult to read or hard to parse in general.

One specific component seems false or unjustified.

It needs to be broken into segments or sections to be understood.

There are many typographical deficiencies that place it “under the bar” for acceptance.

(Many more than these, undoubtedly.)

What you are actually most concerned about is whether your thesis (or theses) is incorrect. Your goal, then, is to transform or expand your work until it begins to receive comments that are fruitful to that end. Even comments that directly argue that your theses are false are better than any of the items in the list above.

Invalid disapproval consists of anything that cites an item from the list above (or something like it) and simultaneously claims or insinuates that your thes[i/e]s are wrong because of that failure. Any of those types of disapproval can be judged locally correct; however, it is doubtful that the receiver of such a criticism will agree that it renders the entire work broken.

Fake Justifications

Why can we expect a large amount of invalid disapproval to be generated? Because most prestigious communities implement some kind of quality bar, and they expect (and attempt to attract) more people and more submissions than they actually intend to let past it.

As long as a community advertises its prestige in some way, communicates its activities to outsiders, enables outsiders to interact with it, and pressures them to try to join, you will have dynamics in which those communities will have to produce “fake justifications”[4] for why certain people and contributions cannot be accepted.

The basic reason is this: suppose a prestige-based community intends to elicit 1000 submissions for some task and accept only 100 of them. No matter what “bar” it devises, the number of submissions that technically clear it may turn out to be more or fewer than the number it pre-committed to accepting. Therefore, there will always be some number of submissions that were accepted or rejected for an ultimately indiscernible reason.
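The selection arithmetic in the paragraph above can be illustrated with a small simulation. This is a minimal sketch under assumptions that are mine, not the source’s: each submission’s quality is modeled as a standard-normal score, the “bar” is a fixed threshold near the 90th percentile (so that about 100 of 1000 clear it on average), and the names `simulate_round`, `summarize`, `BAR`, and `QUOTA` are hypothetical. It counts how many submissions clear the bar in each round and how many accept/reject decisions the bar alone cannot account for.

```python
import random
import statistics
from typing import List, Optional, Tuple

# Numbers from the example in the text: 1000 submissions, 100 accepted.
N_SUBMISSIONS = 1000
QUOTA = 100
# Assumption (not from the source): quality is standard-normal, and the bar sits
# at roughly the 90th percentile (z ~ 1.2816), so about QUOTA clear it on average.
BAR = 1.2816


def simulate_round(seed: Optional[int] = None) -> Tuple[int, int]:
    """Return (submissions above the bar, decisions the bar cannot explain)."""
    rng = random.Random(seed)
    scores = [rng.gauss(0.0, 1.0) for _ in range(N_SUBMISSIONS)]
    above_bar = sum(1 for s in scores if s >= BAR)
    # If more than QUOTA clear the bar, the surplus must be rejected anyway;
    # if fewer clear it, the shortfall must be filled from below the bar.
    # Either way, the bar alone cannot justify abs(above_bar - QUOTA) decisions.
    unexplained = abs(above_bar - QUOTA)
    return above_bar, unexplained


def summarize(rounds: int = 200) -> List[Tuple[int, int]]:
    """Run several independent rounds and print how often the bar 'just works'."""
    results = [simulate_round(seed=s) for s in range(rounds)]
    exact_hits = sum(1 for above, _ in results if above == QUOTA)
    mean_unexplained = statistics.mean(u for _, u in results)
    print(f"rounds where exactly {QUOTA} cleared the bar: {exact_hits}/{rounds}")
    print(f"mean decisions per round not explained by the bar: {mean_unexplained:.1f}")
    return results


if __name__ == "__main__":
    summarize()
```

Under these assumptions the number that clears the bar is a binomial draw that scatters around 100 from round to round, so a round in which exactly 100 clear it is rare, and most rounds contain some decisions that must rest on something other than the bar. The bar is fixed in advance here to mirror the text’s “pre-committed” quota; choosing the threshold after the fact would not remove the arbitrariness, only move it into the choice of threshold.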

But imagine that those prestige-based communities consistently and honestly reported, for each rejected submission, that it had been selected at random for rejection until the pool was down to 100. The message sent to those who submitted would be “Great! Maybe try again next time, or send it to one of our peers!” This ends up granting prestige to those people, and perhaps to peer institutions. If those communities are concerned about their relative prestige rankings, or about maintaining a veneer of exclusivity, they might be incentivized to restrict the amount of prestige granted to outsiders, even implied prestige.

That means they might choose to supply reasons for their rejections (as well as for spurious acceptances) that differ from the true reason. Prestige is a very actively believed-in thing, so those reasons may even need to be performatively believed in as well.

This is why we have some universities that are “saltwater economics schools” and some that are “freshwater.” Academia is centuries old, so there has already been enough time for some academics to have been unfairly excluded from some schools, for those schools to have come up with ideologies to justify that, and for those excluded academics to form their own, competing schools.

Disbelieving In Moloch

I think it makes sense, if you’re an altruist, to want to lower the prestige of communities that don’t deserve it, and to raise the prestige of ones that do.

I think that can be done by working actively on creative and intellectual tasks as one would normally be inclined to do, and by being socially active as well: submitting work to many prestige-based groups and interacting with them frequently. This forces the undeserving ones to create more fake justifications if they choose not to support you, and those are more likely to be recognized, which lowers their prestige. They have to actively attract people they know they will reject in order to maintain their kind of exclusive prestige, so the better your submissions, the weaker their fake justifications will necessarily be. And because those fake justifications need to deliver some kind of prestige payload both to you and to those who issued them, they are going to be public. This is yet another reason to optimize for both approval and disapproval.

When you get hit with a fake justification for being disapproved of, you become “unfairly maligned.” Being unfairly maligned carries its own kind of status, which later develops into a fairly large amount of prestige, and that makes it well worth it.

It’s not un-virtuous to try and be unfairly maligned. This is because you don’t need to do anything egregiously offensive (or even close) to do this. Generally speaking, you can gain this status simply by being honest and yourself.

In fact, I think it’s probably healthy for most people to always see themselves as unfairly maligned to a certain degree, because it generates a lot of internal motivation to keep doing what you’re doing because it’s the right thing to do. You also gain the status of someone who does the right thing even when it’s not popular, and perhaps also of someone who is fairly prescient: when society is divided on an issue, you can tell who is right earlier than most people can.

So being unfairly maligned, I would argue, carries a fair amount of both personal and social (altruistic) utility. Therefore, it pays off in the long run to make a short-term personal sacrifice by taking on some risk of (temporary or partial) negative status.

This depends on you being virtuous. If you are doing what you honestly think is right, and voicing your ideas on how that ought to be done, then I’m saying that you can take the risk of negative feedback there, and it will be made up for. It’s not magic, either. There are explicable social mechanics that cause that to happen.

My models of those social mechanics are still being developed, but since they already justify what I’m saying here, I thought it would be good to share them; doing so should make things a bit easier and more pleasant for anyone who wants to follow a similar path.

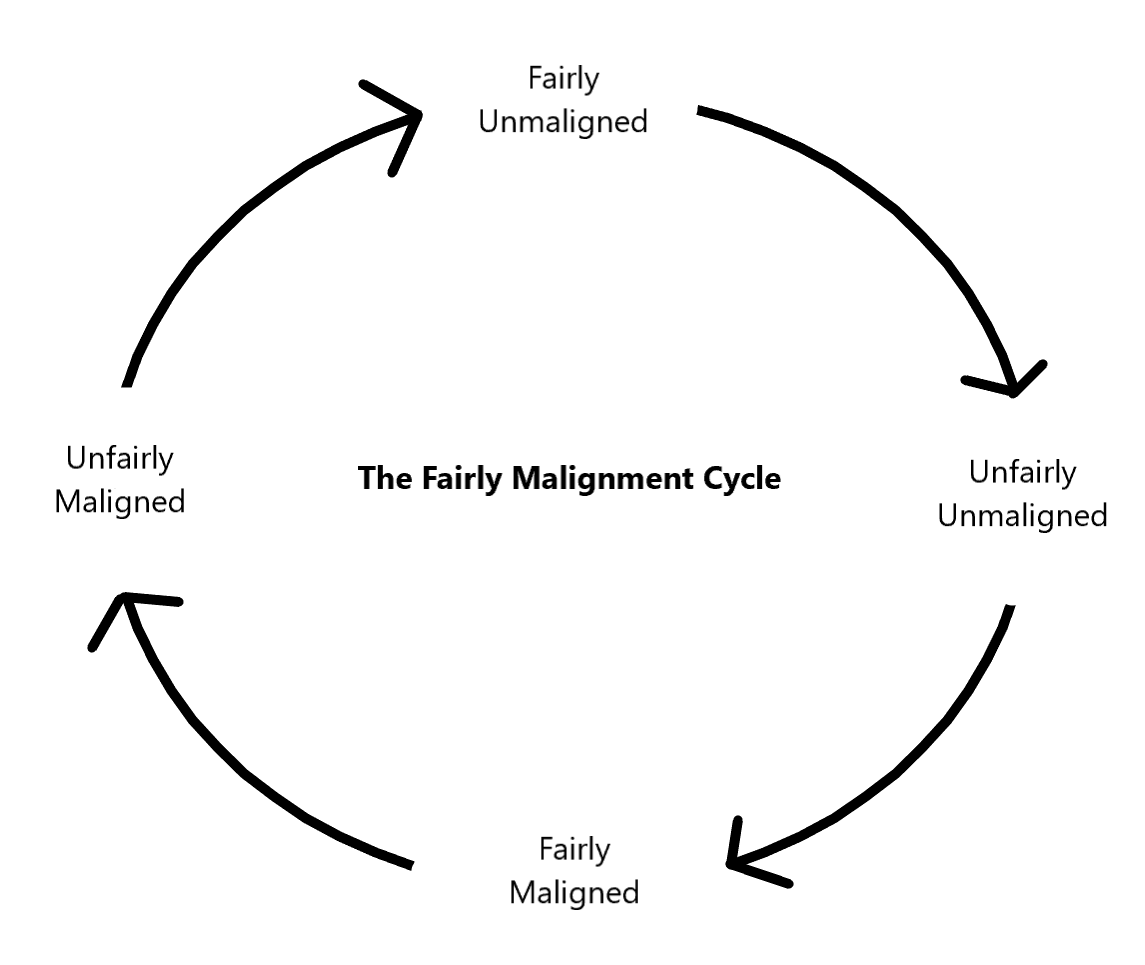

The "Fairly Malignment Cycle"

We have something that kind of resembles Saṃsāra. It is also powered by something that resembles karma, because we are dealing with the consequences of actions that can be seen as virtuous or unvirtuous. We’re calling disapproval “malignment” here and treating it as the primary driver of this cycle, because activity in this cycle is driven mostly by pain and the desire to end that pain.

People, as well as ideas, ideologies, symbols, intellectual property, brands, trademarks, corporations, nations, flags, colors, and other signs and markers of identity, are the objects of malignment (and unmalignment; please excuse the double negative). These are the things that go through the cycle.

When people criticize each other, those criticisms often take the form of moral disapproval rather than a plain matter of fact, and it is usually hard to separate moral disapproval from factual disapproval. For example, when politicians make mistakes, their opponents commonly blame character flaws that make those politicians wholly unacceptable or dislikeable, rather than the complexity of the issues they faced or circumstantial details that made their tasks difficult.

Your IQ probably does not change much over time, and you probably know roughly what it is and how capable that makes you relative to others. However, you are probably acutely aware that whenever you make a mistake in public, it feels as though everyone has suddenly revised their estimate of your IQ downward, while whenever you succeed at something, it hardly seems to move their estimate at all.

What is the reason for that asymmetry? It is probably simply that more people see a rise in your status relative to their own as threatening than see it as helpful to their own status.

This asymmetry is likely to diminish somewhat once siding against you starts to become a liability. At that point, siding with you also begins to have its advantages.

So this is in fact somewhat of a “Contra Moloch” as well as a “Contra Inadequate Equilibria.” Moloch is said to be powerful only when everyone believes that they will be punished by their peers for defecting from the group, and that an unwillingness to punish peers who defect is itself seen as a defection. Moloch is only as powerful as the belief in him.

The thing is, defecting from Moloch just won’t be seen as morally wrong, just as not defecting will never be seen as virtuous, per se. At best, it will be seen as game-theory-folk-theorem-level “rational.” Therefore, Moloch-believers systematically overestimate how much status they stand to gain or lose whenever they face the choice of whether to defect.

Moloch-believers tend to suppress their felt, emotional perception of status in favor of described or “score-based” metrics for status, typically by believing that the former is not as “real” as the latter, or even that the former is more “political” or “ape-brained” than the latter. Therefore, when they judge the risks of defecting on the basis of personally felt virtue or cold, hard, game-theory-folk-theorem logic, they assume that the fuzziness or “unreality” of the first kind of status means it will not carry over into subsequent judgements either. They underestimate the degree to which the constant effort they must apply to their own felt, internal evaluations of status (or to believing that those evaluations are less valid than belief in Moloch) will affect subsequent measures of status among defectors and non-defectors.

Moloch non-defectors become something called “Unfairly Unmaligned” by choosing to punish a defector just because that’s what the rules say, or because they don’t want to become unfairly maligned themselves. When it becomes obvious that they made a bad bet, or that they had nothing to lose by defecting, they may become maligned themselves (that is, fairly maligned).

The only “fair” amount of malignment is a single criticism per instance of a fairly malignable act. Social exclusion and ostracism are always unfair malignment. These are arguable assertions, of course, but I feel fairly confident in them. I do think it is important to consider our initial, gut-level reactions to hearing these things.

The full cycle is shown below.[5]

When people become fairly maligned enough, they change. They start afresh, which puts them back at “unfairly maligned.” So “unfairly maligned” is a bit like youth[6]; “fairly unmaligned” like adulthood, where one enjoys the fruits of one’s labor; “unfairly unmaligned” like old age, where one has status and power but no longer quite the same abilities or incentives to keep doing pro-social things; and “fairly maligned” like death, where even you agree your own body is not performing as well as it needs to.

You’ll probably be motivated to take on the task of politely, rigorously, and honestly engaging with things you consider “unfairly unmaligned,” and you may well receive a far larger degree of backlash than you bargained for. But I don’t think you’ll have to worry about unintentionally causing something to be unfairly maligned through your actions, because status is very explicit and visible, and we will typically be pointed toward targets that are very safe and well-defended relative to ourselves. So it ends up maligning itself, by inviting you over so that it can punch downward. Our actions do not occur in a social vacuum in which you have more free will than someone of higher status than you; the converse is far more likely to be true.

Denouement

I encourage basically everyone to try to stay unfairly maligned. I encourage those with high status in their communities (especially those who have the bureaucratic authority to determine people’s membership status) to consider that, if they want to avoid undue risk (which they often do), they should place more emphasis than they currently do on the risk that unfair malignment brings upon themselves and their establishments.

And I know that they aren’t already doing this, because they often say that they need to “err on the safe side” by accepting that their defense mechanisms may produce many false positives, on the grounds that these are far less costly than false negatives.

They might want to update their models on, e.g., the story (and drama) of Twitter’s approach to censorship, how Musk’s approach has differed from it, and the consequences each approach has had so far.

[1] This is the case where disapproval is valid. But I didn’t say one must assume all disapproval is valid; and when it isn’t, one needn’t be afraid of it either.

[2] And honestly, I am not even sure such changes should hurt in the short term. But use your own judgement.

[3] (Unproven) conjecture: Rude people are probably more wrong than not-rude people, especially in the moment of rudeness.

[4] This is not quite the same thing as what Yudkowsky describes in “Fake Justification.” Where I differ from him is that I assign more blame to people being socially motivated to refuse to update their positions than to individual cognitive bias in the idealized scenario of no-strings-attached, free-thinking individual cognition.

[5] The cycle as depicted is not meant to imply that everyone experiences exactly the same things in exactly the same order and amount or magnitude. There are reasons to behave pro-socially that can make, e.g., being “fairly unmaligned” a longer and better experience.

[6] This could also correspond, if not to biological youth directly, to a newcomer to a community, a new project or ecosystem of ideas, or something being incubated but not yet mature.